Using Computational Methods to Make Art, and to Break Art History – Benjamin C. Tilghman

There is an inherent irony to the fact that advances in computing are revolutionizing our understanding of medieval manuscripts, but it’s perhaps not all that surprising. For one, there’s the simple (and clichéd but no less profound) fact that advances in computing are revolutionizing our understanding of just about everything in our world. But there’s also a deeper resonance in that manuscripts represent the most sophisticated information technology of their time, and many of them present their data so thickly that it is only through computational methods that modern scholars have been able to recover it. It is, I think, no accident that medievalists were some of the very earliest developers of what’s come to be known as digital humanities, first pursuing such work in 1949 and publishing digital resources since the 1960s.

As a scholar and a teacher, I have become absolutely dependent on digital technologies, but I must confess that I have done so with little reflection: everything from word processing programs to image manipulation software has enabled the work I do, but I haven’t thought much about how they fundamentally change it. The Computational Visual Aesthetics workshop shifted my thinking in this regard, towards seeing the potential of digital processes as an open-ended heuristic as much as a means to an end.

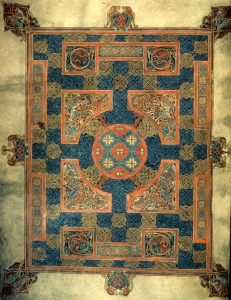

For the workshop, I shared some of my ongoing research on the processes by which the famously intricate carpet pages and other interlace designs in manuscripts such as the Lindisfarne Gospels and the Book of Kells were made. Previous work by Robert Stevick, George Bain, and Derek Hull has uncovered some of what we might call the source codes for early medieval ornament. My focus recently has been taking these fascinating results and speculating about how the makers of these objects–mostly if not exclusively monks–might have thought about the geometric processes they were using. How might they have theorized the emergent processes that took them from a basic set of lines and grids to compositions that challenge the perceptual abilities of the human eye, and the comprehension of the mind? Might they perhaps have seen some sort of divine hand at work, forming these designs alongside them? I argue that they did, and that the displacement of artistic agency away from the human maker towards divine geometric processes would have enhanced the power and numinousness of the objects. There may even have been a sense of propriety at work: for religious objects like stone crosses, liturgical vessels, and gospel books, geometry may have been perceived as the visual form most fitting for objects of religious transcendence.

Such questions of meaning are where my mind naturally goes when considering works of art, and I have to confess that the specific geometric processes reconstructed by Bain, Stevick, and Hull hold little interest for me in and of themselves. But perhaps then I’m missing the point: is it possible for me to understand computational art if I’m not particularly interested in thinking computationally? How do my habits of thinking, and seeing, match up to the works and those who made them?

What we perceive in works of art is predicated necessarily on what we expect to perceive in works of art, or perhaps, what we think we are supposed to perceive.[1] In early medieval art, many of the intricate and seemingly abstract patterns we see in manuscripts and on objects were consciously created to produce puzzling images that can be perceived only through careful looking and even some manipulation within the mind’s eye. That is to say, beholders were expected to approach these images not so much as given visual phenomena, but as problems to be solved (shameless self-promotion–you can read my essay about this strain of visuality here). As the discussion developed in the workshop, particularly in the light of Chris and Adriana’s papers, the group became more interested in these volitional aspects of seeing: if we are going to teach computers to see, we have to think carefully about what they are supposed to look for, which demands that we think with greater clarity about what it is we look for in an artwork and how we look for things in artworks. One of the things we circled around was the lexicons used by computer scientists to tag and analyze works of art. Whether describing the particular objects within a work, or the aesthetic of the work as a whole, those lexicons in many ways might be determining the shape of the answers before the questions are even asked. What we look for is shaped in part by what we identify as possible subjects ahead of time. We’ll only see what we think it is possible to see.

That got me wondering how we might get computers to help us rethink what it is possible to see. Might computational seeing serve as a way of productively “breaking” the art historical process? At one point, Alison quickly described how text-mining programs can produce a suggestive grouping of words out of a large data set, but it is still up to the humanist to make some sort of sense of those groupings. A word string has only the semantic valences that we perceive in it, just as a complex figure of interlacing lines contains only the patterns we see in it. I’ve had some good fun playing with Voyant Tools to get a better sense of how this works. I wondered if it would be possible to do that with images: if we tasked some image processing software to parse a set of images and present us with subsets of objects within those images, would we be able to make sense of those subsets? That is to say, would a computer program see the same similarities among visual data that we see? Or might the image set be nonsensical to art historians? Perhaps it would be sensible, but also kind of boring: the program might, for example, return a pile of triangular shapes that it found in a group of paintings, and we might shrug our shoulders and say, yup, there’s a bunch of triangles. But a seemingly negative result such as that might actually be fruitful as a heuristic tool for bringing greater clarity to the volitional seeing of art historians. What are we looking for? Why do we feel that those are the things to look for? We speculated on the possibilities for an exercise (a “locked-room” experiment, as Alison put it) in which art historians would commit in advance to write a paper based on the results of an image-mining assay, no matter what turned up. How would art historians handle such a challenge? If we found ourselves with nothing to say, what might that tell us about our discipline?

In an interview about his brilliant late-70s collaborations with Brian Eno, David Bowie described how they found the greatest inspirations by misusing–even abusing–the new synthesizers of the time. Rather than using the traditional sounds that came pre-programmed in the machines, Bowie and Eno sought out new sounds that would lead them to make new music. Computational seeing, I think, offers a similar opportunity: with a little creative misuse, perhaps visual analysis programs could point us towards new art histories.

[1] As an aside, I think it’s this latter phenomenon–the feeling that one has to perform a certain kind of perception–that creates such a blockage for many people when confronted with modern and contemporary art. They have the intuition that they’re supposed to be seeing complex philosophy, or incisive cultural critique, or arch irony, and they aren’t sure how to do that.

Benjamin C. Tilghman is assistant professor of Art History at Lawrence University in Wisconsin, and a member of the Material Collective, a collaborative working group of medieval art historians. He is the author of essays on writing and ornament in the Book of Kells and related manuscripts, on materiality and enigma in Anglo-Saxon art, and on the meditative qualities of miniature drawings in late Renaissance art. Previously, he served as curatorial fellow at the Walters Art Museum, where he organized exhibitions on miniature books, the Saint John’s Bible, and images from the Hubble Space Telescope.